How Close Are We to Catastrophic Climate Change? Plus, The World is Made of Tail Risk, and Is AI Making us Dumber?

I’m Umair Haque, and this is The Issue: an independent, nonpartisan, subscriber-supported publication. Our job is to give you the freshest, deepest, no-holds-barred insight about the issues that matter most.

Quick Links and Fresh Thinking

- America’s Year of Living Dangerously (Project Syndicate)

- Who bears the risk? (Aeon)

- Hire factcheckers to fight election fake news, EU tells tech firms (The Guardian)

- Global warming and heat extremes to enhance inflationary pressures (Springer Nature)

- The 6 most common leadership styles & how to find yours (IMD)

Hi! How’s everyone? I hope you’re all well. Today, we’re going to discuss some emerging research that’s really striking. Beginning with…

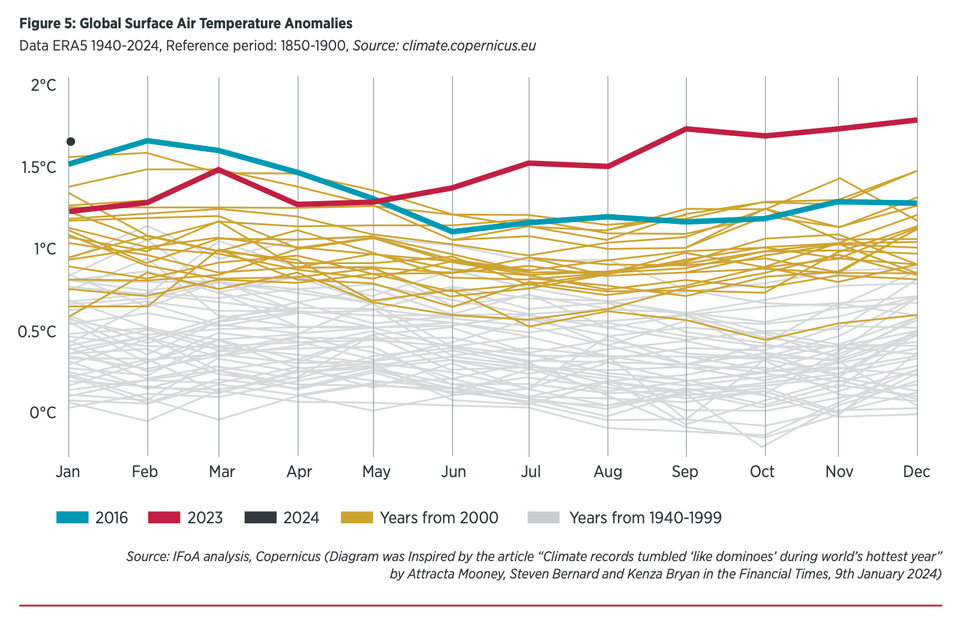

Some of the most cutting-edge thinking in the world today about climate change comes from Britain’s Institute and Faculty of Actuaries. We discussed their recent report, which concluded that climate change could destroy up to half our economies by 2070. The next report in that series has just come out, and if anything, it’s even…scarier.

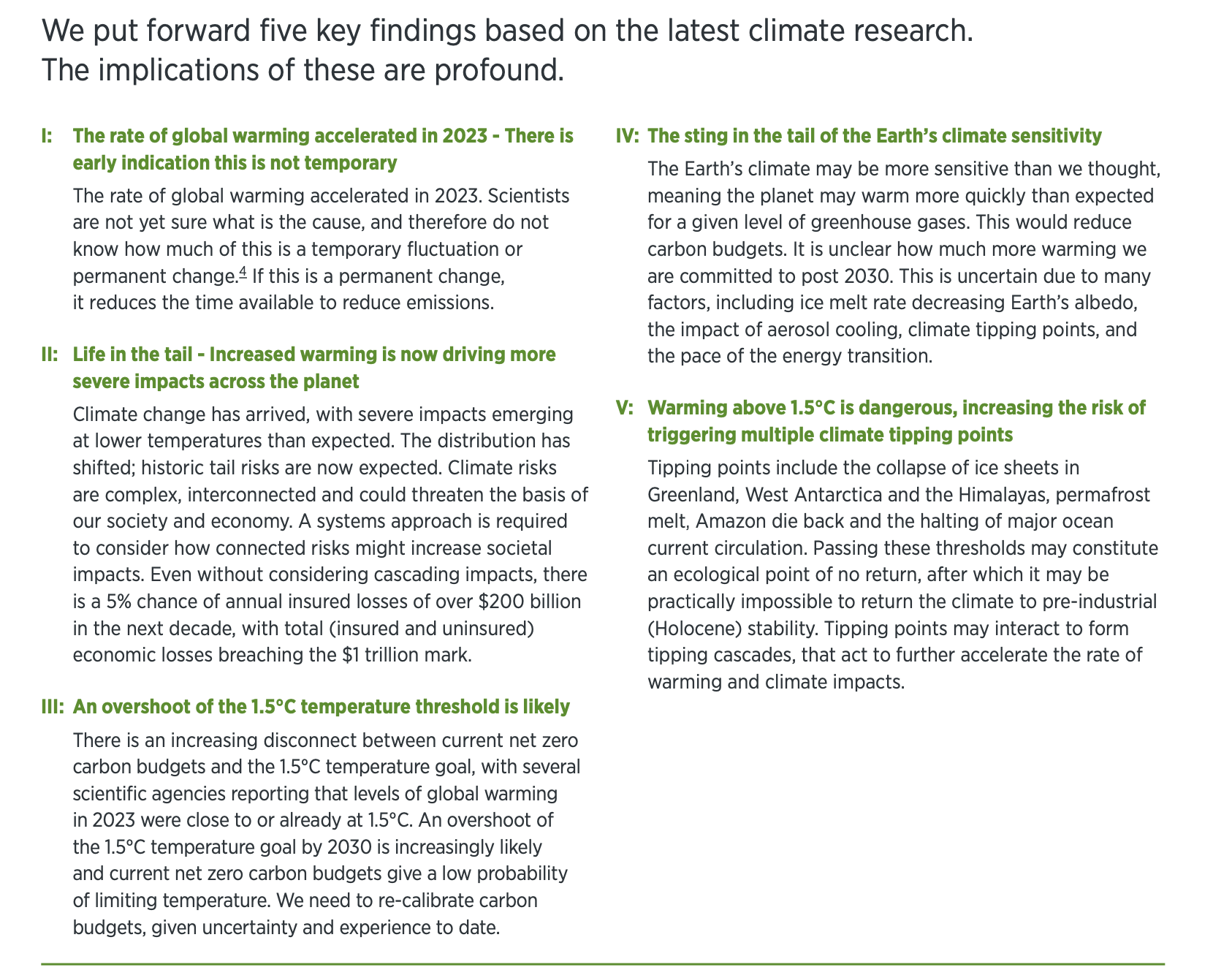

Sorry, this is going to be a bit of a major download, but I think that we should all be conversant. So below are the key findings from the report:

This is super powerful stuff. Why is it so important? It’s among the first waves of research to really try to examine not just how close we are to planetary boundaries, but to their effect on…societies, civilization, us.

What the report says is profound and pretty alarming. Let me sum it up and translate.

First, climate change appears to be accelerating—we’ll come back to that in just a moment.

Second, and this point is crucial, risk is nonlinear, meaning that each additional increment of warming results in disproportionately more catastrophic impacts. Think of yesterday’s wildfires, versus, now, in the summer, much of Canada on fire.

Third and fourth, we’re not going to limit warming to 1.5 degrees, the old international target, and in that sense, we’re failing at limiting warming. But, and again this is key, the earth is “more sensitive” than we thought, meaning that it’s easier to “perturb,” in complexity theory terms, or in plainer English, to destabilize and turn haywire.

Fifth, because of all that, we’re cruising towards hitting all the planetary tipping points we know of, all of which of course accelerate warming that much further.

Like I said, that’s a lot. But it’s a fantastic summary of where we are, probably the best one so far: accurate, concise, and containing all the key conclusions, findings, and facts.

The World is Now Made of Tail Risk

The report goes on to talk about “tail risk.” If you’re not a big math or finance or stats nerd, that just means: rare events can have extreme magnitudes. So think of a “once in a thousand year” storm or fire or flood—that’s tail risk. But what’s happening to us now is that tail risk is becoming central, because of course what climate change does is “put the sting in the tail,” as the report says.
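If you want to see the arithmetic behind that idea, here's a minimal sketch. It isn't from the report. It assumes, purely for illustration, that some climate variable follows a bell curve and that an "extreme" event is anything more than three standard deviations above the old average. The function name, the threshold, and the shift sizes are all made-up assumptions.

```python
from statistics import NormalDist


def exceedance_probability(mean_shift, threshold=3.0, spread=1.0):
    """Chance that a normally distributed variable exceeds `threshold`
    once its average has moved by `mean_shift` (all values hypothetical)."""
    return 1.0 - NormalDist(mu=mean_shift, sigma=spread).cdf(threshold)


# Baseline: the average has not moved, so the "extreme" event is rare.
baseline = exceedance_probability(0.0)

# Shift the average a little at a time and compare against the baseline.
for shift in (0.0, 0.5, 1.0):
    p = exceedance_probability(shift)
    print(f"shift={shift:.1f}  P(extreme)={p:.5f}  vs. baseline: {p / baseline:.1f}x")
```

Under these toy assumptions, nudging the average by one standard deviation makes the "extreme" event many times more likely, not merely a little more likely. Real climate distributions aren't neatly normal, so treat this as intuition, not a forecast.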

All of that’s how you probably think about climate change intuitively, and if it’s not, it’s how you should. It doesn’t take a genius, really, to reach those conclusions, and I think that many people who feel deeply distressed about the future (and the present) have a kind of innate grasp of all that. Their mental model already goes like this: warming accelerating, tipping points approaching if not already being hit, warming accelerates further, catastrophic events become more common. In other words, most of us already grasp “tail risk,” and it worries the hell out of us, because it looks a whole lot like the world is now made of tail risk.

Think about another form of tail risk, after all. Let’s call it “political tail risk.” Once a century, or maybe half century, a fascist movement arises, and wreaks havoc. But now what we’re seeing is the simultaneous rise of the far right across the globe. That’s kind of the stuff of political nightmares—after all, democracy’s already halved to just 20% of the world, since that wave exploded, so how low is it going to go? We now live in a world dominated by political tail risk.

As in: will Trump get re-elected? It used to be a fantastical idea, but now it seems to be coming true. And who knows what lunatic will rise to power in what country next. These sorts of once extreme events are becoming common politically, too.

Let’s do one more quick example. Can you think of forms of social tail risk? I can. Think of the way that it used to be exceedingly rare for a generation to do worse than its forebears. So much so that, at least in the modern era, we never really used to see it happen. Generations may have had periods of relative decline, like the Great Depression, but the upwards trajectory across generations was reliable and constant.

And yet now we live in a world facing systemic, chronic downward mobility. Few people expect their kids or grandkids to do better than them, and that’s true in America, Europe, China, and beyond. Gen Z’s numb with trauma, and China’s young people are “lying flat,” or fatalistic and resigned to stagnation. This is a kind of intergenerational tail risk coming true, and it’s frightening to observe, at least for those of us who care about history, progress, and prosperity.

Here’s yet another form of social tail risk. Think of how…relatively…uncommon…it used to be to meet people who believed in outlandish conspiracy theories. Remember the, I don’t know, X-Files? That was a whole TV show that made it big precisely because it was once weird. But now? The Republican Party is making people take loyalty tests that ask if they believe the last election was stolen. Conspiratorialism and other associated forms of lunacy are that commonplace, and you really never know what someone you strike up a random conversation with might believe that once used to be…

Extreme. We might still call beliefs like this extreme, from “the election was stolen,” to “those dirty people aren’t even people!,” but the problem is that more and more people seem to share them, or at least back them. So extremism isn’t really rare anymore—it’s become more and more commonplace, surrounding us, permeating us, social media and whatnot radicalizing masses en masse, all of which is exactly what tail risk is—the extreme becoming more and more common—and in that sense, this is a form of social tail risk coming true.

A world of tail risk, I might add, is a scary and alien place. To all of us. Not just those who’ve been driven out of their minds and turned to extremism. That happened precisely because tail risk, or destabilization, is so scary that these sorts have turned to strongmen and scapegoating for purpose, stability, and meaning. So we could just as well say that tail risk itself appears to be a kind of positive feedback system, a self-perpetuating process, a vicious circle.

Is AI Already Making Us Dumber?

Here’s another bit of recent research that really struck me. It’s one of the first studies to investigate whether AI is making us…dumber. What did it find? It’s sort of funny, almost, so enjoy some comic relief from the Vibes of Doom above. Check it out:

“Not surprisingly, use of ChatGPT was likely to develop tendencies for procrastination and memory loss and dampen the students’ academic performance. Finally, academic workload, time pressure, and sensitivity to rewards had indirect effects on students’ outcomes through ChatGPT usage.”

Like I said, sort of comical, right? The researchers went out and surveyed these poor students, and what they found was straightforward, clear, and just what you might expect. Using AI made those kids more prone to procrastination, memory loss, and “dampened their academic performance,” which is a Very Polite Way to say, in plain English, made them dumber.

Chuckle if you must, I certainly did, because, well, what else would you expect? My lovely wife the doctor was grading some papers last week, and she was howling in pain. “What’s wrong?” I asked, concerned. It was the first year her medical students had begun to use AI in earnest, and the papers were…terrible. Just shockingly bad. Not only was it pretty obvious who’d used AI where, and for what, but the results were often wrong. Not good for tomorrow’s…doctors. We should want them to know their stuff to a T.

I often wonder these days what the point of AI is. I imagine that one answer to that is easy: money. Business is completely obsessed with AI to the point of it being the Biggest Business Buzzword Ever, to a degree that’s ludicrous—Google’s CEO just called it the equivalent of the discovery of fire. Go ahead and roll your eyes, I certainly did.

The point of AI should be distinguished from the effects of AI. If the point of AI is money—let’s cut these jobs, and hand them to the bots! Hurray, we saved a few million dollars, and that means we can keep our own executive jobs a little while longer while the economy continues going to hell, and I’m not kidding about that, think about how many layoffs there have been recently—then of course the effects of AI will be that much the worse. Because when the point of a thing is money, and just money, well, history’s pretty clear on how bubbles of that sort turn out.

I imagine that AI will be to tomorrow what social media is to today. I don’t think AI’s going to yield planet-killing robots (who needs those, remember the research from the actuaries above?). But I do think it’ll create a kind of low-level toxicity, in the same way that social media has left kids (and people) today stressed, depressed, and numb. AI is probably going to do exactly what the research above says, which is steal parts of our humanity from us—our intellect, creativity, knowledge, even our morality, as we come to rely on it to mediate everything from shopping to relationships to information.

It’s funny how these things cost us the very thing they promised. Social media cost us sociality, and flattened us all into numbers and avatars, helping destabilize the world. “AI” is probably going to cost us all the kinds of intelligence that we know of, from emotional to social to rational and beyond. That’s a bleak thought, but of course, it’s not an unfounded one, because that’s precisely what the research above shows. I’d bet dollars to donuts that research will be replicated time and again over the coming years, even as the AI lobby pretends that it’s going to Save the World or what have you.

I’m not saying it’s useless, by the way, or that it’s all bad. But I am saying what we discussed in the Divergence posts: “tech” no longer lifts living standards, and now we’re in a very different age. In this one, the question isn’t gee whiz, look what we can do with our marvelous machines. It’s whether we’re doing anything that matters, or just adding more dystopia to the already poisoned future.
